Highlight

Eye movements while viewing narrated, captioned, and silent videos

Achievement/Results

Rutgers University graduate student Nicholas M. Ross, supported by the National Science Foundation Integrative Graduate Education and Research Traineeship program, examined eye movements of people viewing narrated, captioned and silent videos. Video has become a primary means of conveying information in all kinds of settings, including schools, jobs, or homes. Information in videos can be conveyed either through visual images and graphics, or through narration, where narration is in the form of an audio stream or captions. Narration presents an interesting problem for the viewer: it both supplements the visual information, while at the same time has the potential to take attention from the visual information. Examination of eye movements can reveal the effects of narration on the strategies used to view the video, as well as how people choose to divide their time between captions and the pictorial portion of the display. Videos are inspected by a type of eye movement known as saccades. Saccades are fast and intermittent eye movements that take the line of sight to areas of interest in the visual scene in an effortless, but purposeful, way. Decisions about how to plan saccades in space and time play a crucial role in apprehending the content of natural scenes. Much of the previous work on eye movements in scene viewing was done on static scenes and has only recently been extended to dynamic scenes. One limitation of prior work on eye movements while viewing videos has been the absence of sound or narration in the stimuli tested.

The main goal of this project was to study strategies of reading narrated and captioned videos. Captions present special challenges because gaze has to shift continually between video and text. Strategies of saccadic guidance should, ideally, minimize loss of information from either the captions or the video portion of the display. Eye movements of participants viewing 2 minute video clips from documentaries were recorded. Viewing was motivated by multiple choice questions after each clip. Videos were either accompanied by narration (either audio, captions, or redundant audio and captions) or had no narration. While viewing videos without written captions, the line of sight tends to remain quite close to the center of the display. The preference to maintain the line of sight near the center can be seen in the representative heat-map plots on the left side of Figure 1, taken from the results of one of the participants. Overall, most eye positions fell in the central 13% of the area of the display screen.

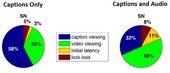

The preference to remain near the center is believed to represent an efficient strategy since most of the important details are centrally located. In addition, the similarity between the upper graph (audio narration condition) and lower graph (no narration) on the left side of Figure 1 shows that eye movements were not affected much by the presence of audio narration. This impression was verified by quantitative analyses of the duration of the pauses between consecutive saccades. Pause duration was about 400 ms, regardless of the presence of narration. The presence of captions dramatically altered the strategies used to view the videos (graphs on right side of Figure 1). The line of sight was drawn to the lower portion of the display, which contained the captions. Remarkably, the strong preference to read the captions persisted even with audio narration present (bottom right). Figure 2 shows in detail how viewing time was apportioned with the captioned videos. When cations were the only form of narration present, this representative subject devoted more than half of the time (58%) to reading the caption. When redundant audio narration was present, 32% of the time was spent reading. Analyses of the eye movements showed that the reduced portion of time reading when the audio was present (32% vs. 58%) was not due to faster reading, but rather to skipping portions of the captions.

Any time spent in the caption area comes at the cost of viewing the rest of the display. Thus, the decision to read the captions in the presence of redundant audio narration is puzzling. Why sacrifice viewing the video in order to read the caption instead of just relying on the audio stream? There are several possibilities. It may be that reading is faster than listening, however, this does not account for why anyone spend time reading the text when all the information was in the audio stream. Perhaps it is the case that it is easier to use the eye to split attention between two visual sources, video and caption, than between a visual and an auditory source. Finally, the strategy to read in the presence of audio may reflect preferences or learned habits to pay attention to text, preferences that are beneficial in most situations, such as reading road signs or instructions. These habits may require too much effort and monitoring to override, even when they are not necessarily optimal. These same preferences were observed in all six of the participants studied. The results, including the central viewing locations (Figure 1) and the large portion of time spent reading even in the presence of redundant audio (Figure 2), point to the continual need for human observers to make decisions about how to allocate and manage their resources. The examination of the eye movements shows that these decisions, made anywhere from one to three times each second, reflect the struggle to make the best use of available information without overburdening our decision-making tools. Decisions may not always appear to us to be optimal, perhaps because the decisions take into account the cost or effort of constantly having to choose where to look. It is often easier to rely on the old habits rather than to learn new skills, even at the cost of momentary loss of information. (Ross & Kowler, 2013, Journal of Vision, 13(4):1. doi: 10.1167/13.4.1.

Address Goals

Discovery: The highlighted research advances our understanding of saccadic strategies in dynamic scenes. Furthermore, it introduces narration as a powerful and useful tool for studying eye movement strategies, which may shed light on ways to optimize instructional material presented in video form. By examining the characteristics of narration (text or audio) that lead to higher retention and better understanding of visual information, we can improve not only educational videos, but also signage used on roadways and GPS systems. This work also has the potential to improve devices for the hard-of-hearing by suggesting that the position of the caption in the frame is important for being able to efficiently switch between narration and important parts of the scene. As new technologies emerge, such as gaze-controlled interfaces, it is important to be aware of preexisting biases that may weaken the usefulness of such devices in certain settings (e.g., when a lot of text is present).

Learning: This project required the use of complex computer vision algorithms as well as a basic understanding of the linguistic aspects of the project. Thus the project fostered interdisciplinary collaboration and learning, preparing the next generation of scientists to embrace and contribute to interdisciplinary projects in the future.